How Headphones are Changing Music Production

Quick Answer

The use of headphones instead of traditional speakers is drastically changing how music is being listened to, recorded, mixed and mastered. The bulk of these changes results from the lack of room acoustics associated with headphone use, but many other important factors come into play.

How Headphones are Changing Music Mastering and Production

How we are listening to music is changing. The popularization of headphones, typically in the form of earbuds, is drastically changing how music is made and listened to.

Music is becoming an increasingly isolated event - no longer are groups gathering to listen to a record in a living room. Instead, we each own a pair of small portable speakers that are constantly increasing in quality and decreasing in cost.

Headphones are becoming increasingly popular, raising the question, "How does this affect music production?"

This solitude affects how music is interpreted emotionally, and in turn, changes the production - but instead of focusing on the psychological effects of isolated listening experiences (something that is by no means my area of expertise) let’s discuss how the increasingly popular use of headphones is changing how music is produced.

The popularity of headphones is slowly but surely changing music production.

Primarily, we’ll be discussing how it affects the mastering process - but we will be detailing some specific ways in which it’s changing mixing and tracking as well.

Additionally, we’ll be looking into what about mastering isn’t changing due to headphone use, at least not anytime soon.

Lastly, we’ll be covering the future of headphone use, what this means for mastering, and how mastering engineers will need to adapt their processing for the emerging technology.

But first and foremost, let’s talk about the technical differences between music played through headphones and music played over loudspeakers. By understanding these differences, we can begin to comprehend why all of the other changes are needed and perhaps, inevitable.

If at any point you’d like to hear your music mastered, send us a mix here:

We’ll master it for you and send you a free mastered sample.

The Technical Differences Between Headphones and Loudspeakers

Simply put, loudspeakers are larger and produce sound into an acoustic space; headphones are much smaller and produce music directly into the ear, not an acoustic space. These two characteristics have immense technical implications for how the source material can be perceived due to acoustical physics.

Reverberation

When music is played using loudspeakers, the acoustical energy, or sound waves, is dispersed into a room - the sound waves reflect against all surfaces in the room, with each reflection altering the overall sound.

The room into which music is played affects the sound of that music.

This means that the material used in the room, the size of the room, the shape of the room, the number of people in the room, and so on, will in turn change how music from a loudspeaker sounds.

Everything inside of a room affects how music reverberates.

This is why throughout history music has been greatly shaped by the room in which it is played.

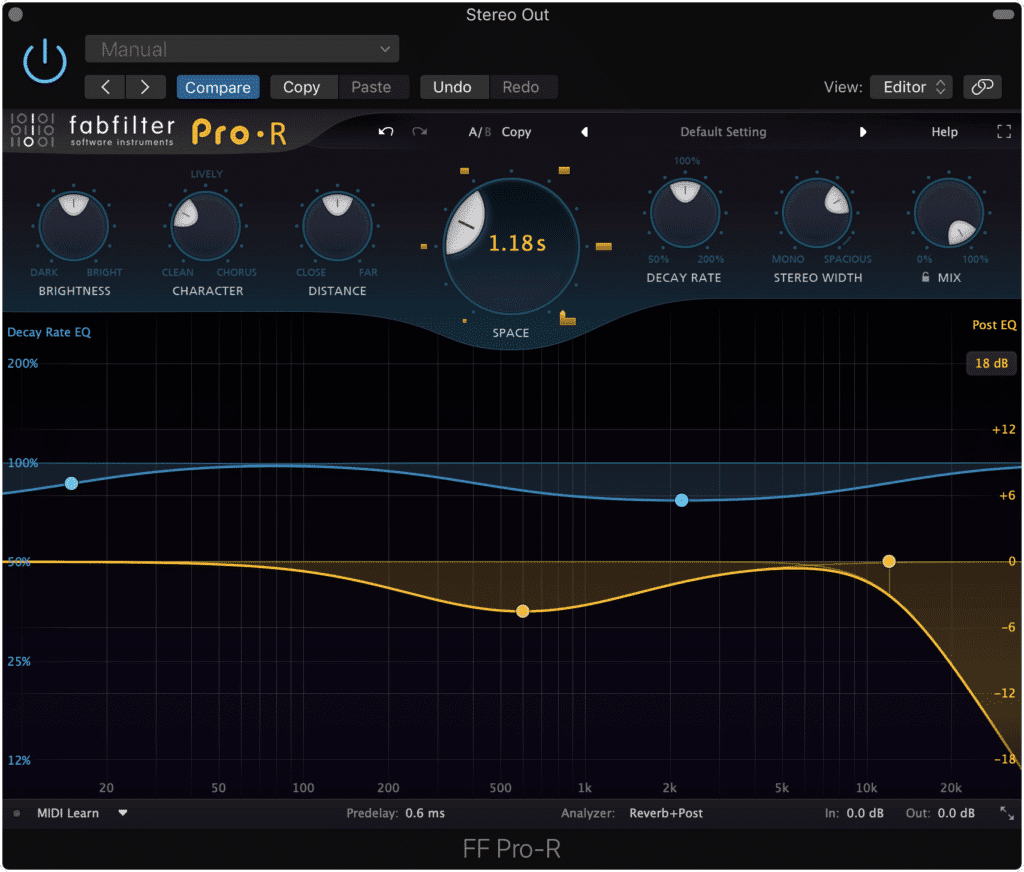

For example, romantic classical music was typically performed in concert halls with reverb times of 1.8 to 2.2 seconds. This amount of reverb dictates how much instrumentation can be played simultaneously before it begins to sound cacophonous (hence the more simplistic orchestration of romantic classical music).

Classical music halls are designed with reverb times in mind.

Another example of this phenomenon can be recognized when measuring the reverb times of modern pop and rock halls. Most well-liked concert halls have reverberation times between 0.6 and 1.2 seconds, with lower reverberation times between the frequencies of 63Hz and 125Hz. This shorter reverb time allows for louder and more complex instrumentation.

Rock and pop venues have shorter reverb times to accommodate louder instrumentation.

In case you’re curious, here are some reverb times that are closely associated with, and developed in tandem with, various genres in music’s history:

- Organ Music: Greater than 2.5 Seconds

- Romantic Classical Music: 1.8 to 2.2 Seconds

- Early Classical Music: 1.6 to 1.8 Seconds

- Opera: 1.3 to 1.8 Seconds

- Chamber Music: 1.4 to 1.7 Seconds

- Theatre: 0.7 to 1.0 Seconds
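To make the list above easier to play with, here is a minimal sketch that stores those ranges and looks up which genres a given reverb time would suit. The function name `genres_for_reverb_time` is made up for this example, the bounds are treated as inclusive for simplicity, and `math.inf` stands in for "greater than 2.5 seconds".

```python
import math

# Reverb-time ranges in seconds, copied from the list above.
# math.inf stands in for the open-ended "greater than 2.5 seconds".
REVERB_RANGES = {
    "Organ Music": (2.5, math.inf),
    "Romantic Classical Music": (1.8, 2.2),
    "Early Classical Music": (1.6, 1.8),
    "Opera": (1.3, 1.8),
    "Chamber Music": (1.4, 1.7),
    "Theatre": (0.7, 1.0),
}


def genres_for_reverb_time(seconds):
    """Return the genres whose listed range contains the given reverb time.

    Bounds are treated as inclusive, which is a simplification.
    """
    return [
        genre
        for genre, (low, high) in REVERB_RANGES.items()
        if low <= seconds <= high
    ]
```

A room with a 1.5 second reverb time, for instance, falls inside both the opera and chamber music ranges, while a 0.5 second room falls outside every range in the list.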

This is why audio aficionados of the past spent a lot of effort and time to create a good sounding listening room for their music. At the time, both the amplification system and the room played a role in crafting an enjoyable sound.

Today, things are completely different - the room doesn’t play a role. The music is played directly into the ear, completely bypassing the sound of a room and all of the acoustics we just discussed.

Listening with earbuds completely negates the effect of room acoustics.

As you can imagine, this greatly alters the sound of a sound source, and in turn, affects how the music is created.

But before we get into that, let’s discuss why the speaker size and position is also an important part of how headphones are changing music.

Speaker Size

The diameter of a speaker, as well as the depth and material of the casing in which the speaker cone is embedded, greatly affect the frequency response of that speaker.

The size of a speaker and the shape affect the frequencies that it can reproduce.

A smaller speaker will have difficulty reproducing lower frequencies, whereas a larger speaker will have difficulty reproducing higher frequencies. This is why subwoofers are larger and tweeters are smaller.

This same concept applies to earbuds. Because the speakers are so much smaller, they can’t reproduce lower frequencies, but can more easily produce higher frequencies.

Smaller speakers can more easily reproduce higher frequencies.

Furthermore, the distance of a speaker from the listener affects how the frequencies can be perceived. For example, have you ever heard a hip-hop track being played from a car speaker system and wondered why the low frequencies were so loud?

Listening to hip-hop or dance music in a car often means those outside the car will hear the low-end more than you.

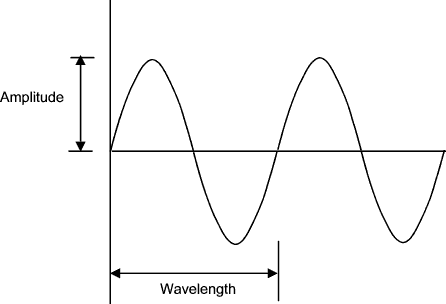

The reason is that low-frequency sound waves are much longer and take a greater amount of space to complete a full cycle. A 100Hz wave is about 11.3 feet long.

This would explain why low frequencies sound so loud when played from someone else’s car speaker system - because the driver can’t hear them as well, they turn up the low end to compensate. But from 10, 20 or 30 feet away, these frequencies are more than perceivable.

Lower frequencies have much longer wavelengths than higher frequencies.

If you’d like to find out how long a sound wave is, here's a simple formula:

The speed of sound is roughly 1130 feet per second. Divide the speed of sound in feet per second by the frequency in Hertz.

- Ex. 1130/100 = 11.3 ft.

- Ex. 1130/2000 = 0.565 ft.

So a 100Hz sound wave is 11.3 feet long, and a 2kHz sound wave is 0.565 feet long.
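The division above can be sketched as a small function. The name `wavelength_ft` is just an illustration, and the speed of sound is the same rounded 1130 feet per second figure used in the article (the real value shifts with temperature).

```python
# Rounded speed of sound in air, in feet per second, as used above.
SPEED_OF_SOUND_FT_PER_S = 1130.0


def wavelength_ft(frequency_hz):
    """Return the length of one cycle of a sound wave, in feet."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be a positive number of Hertz")
    return SPEED_OF_SOUND_FT_PER_S / frequency_hz
```

Calling `wavelength_ft(100)` reproduces the 11.3 foot figure from the first example, and `wavelength_ft(2000)` reproduces the 0.565 foot figure from the second.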

But to get back on track, we can see how these changes have affected our listening experience. We’re no longer listening to music that can be faithfully reproduced. As we’re about to see, this has affected the processing used when mastering music.

How Headphones are Affecting Mastering

As we’ve just discussed, headphones are changing how music is listened to by affecting what frequencies can be heard and the reverberation of the sound source.

But how does this affect the mastering process?

As you might imagine, a mastering engineer will need to compensate for some of the shortcomings, and even some of the strengths of small speaker systems, aka earbuds.

Because small speakers cannot reproduce low frequencies, nor do they allow for the space needed for a low-frequency wave to travel, some creative forms of processing will need to be introduced.

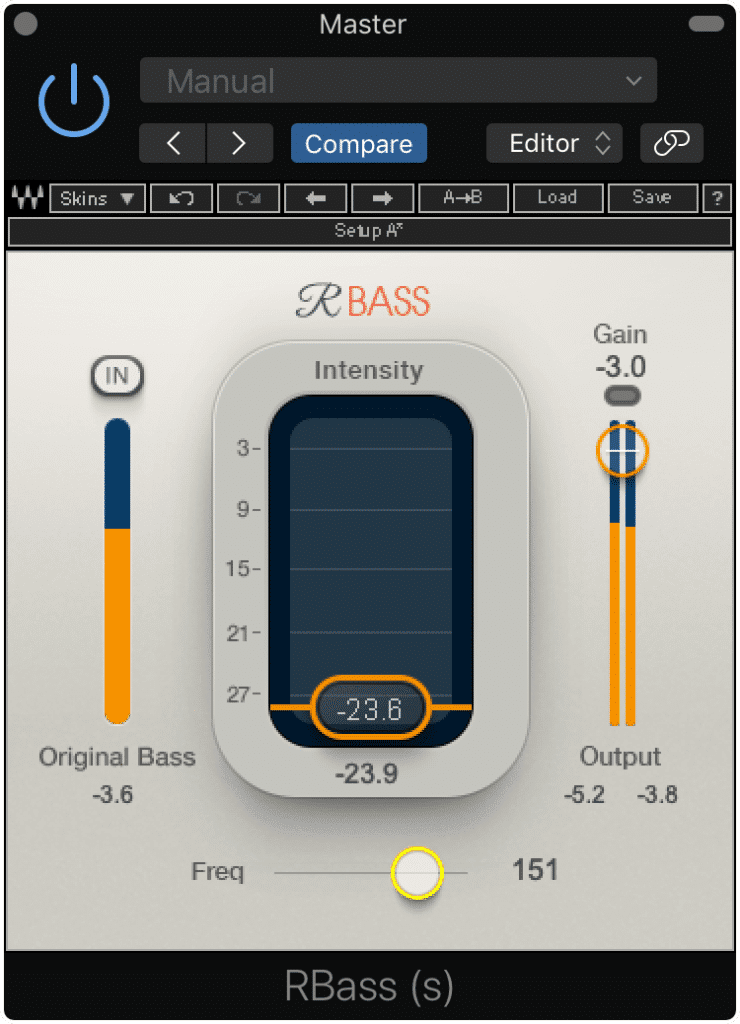

The RBass plugin creates low-order harmonics directly above the lowest fundamental.

This processing typically comes in the form of psychoacoustic effects, the primary one being low-order harmonic generation of the lower bass frequencies.
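To give a rough feel for what harmonic generation means, here is a minimal sketch that runs a 100Hz sine wave through a simple waveshaper and measures what appears at 200Hz and 300Hz. This is a generic illustration, not a description of how RBass or any other plugin actually works; the sample rate, the `add_harmonics` function, and its `amount` parameter are all assumptions made for the example.

```python
import math

SAMPLE_RATE = 8000  # samples per second, chosen arbitrarily for this sketch


def sine(frequency_hz, seconds=1.0):
    """Generate a unit-amplitude sine wave as a list of samples."""
    count = int(SAMPLE_RATE * seconds)
    step = 2 * math.pi * frequency_hz / SAMPLE_RATE
    return [math.sin(step * i) for i in range(count)]


def add_harmonics(samples, amount=0.5):
    """Hypothetical waveshaper: the squared term adds a 2nd harmonic,
    the cubed term adds a 3rd harmonic."""
    return [x + amount * x * x + amount * x * x * x for x in samples]


def level_at(samples, frequency_hz):
    """Estimate the amplitude of one frequency using a single-bin DFT."""
    count = len(samples)
    step = 2 * math.pi * frequency_hz / SAMPLE_RATE
    real = sum(x * math.cos(step * i) for i, x in enumerate(samples))
    imag = sum(x * math.sin(step * i) for i, x in enumerate(samples))
    return 2 * math.hypot(real, imag) / count
```

Comparing `level_at(sine(100), 200)` with `level_at(add_harmonics(sine(100)), 200)` shows that the dry sine has essentially nothing at 200Hz, while the shaped signal does. A real plugin would be far more careful about which harmonics it adds and how loud they are.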

It's important to note that the harmonics or overtones of a sound make the fundamental frequency more easily perceived. So for example, if the fundamental is 100Hz, harmonics at 200Hz and 300Hz make that fundamental more perceivable.

If the 100Hz fundamental were not present in the above example, it could still be heard due to the phantom fundamental effect.

But what’s pretty amazing is that these harmonics can cause the perception of a fundamental even when that fundamental is not present. This effect, known as the phantom fundamental effect, means that harmonics can be used to create the perception of low frequencies even when they aren’t there, or in the case of earbuds, can’t be reproduced.
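One simplified way to reason about which fundamental a set of harmonics implies is to take the greatest common divisor of their frequencies, since that is the rate at which the combined waveform repeats. The sketch below assumes whole-number frequencies in Hertz, and `implied_fundamental_hz` is a name invented for this example. Hearing is more complicated than this arithmetic, so treat it as an illustration rather than a model of perception.

```python
from functools import reduce
from math import gcd


def implied_fundamental_hz(harmonics_hz):
    """Return the greatest common divisor of whole-number frequencies.

    For exact harmonics, this is the repetition rate of the summed
    waveform, which is the pitch the phantom fundamental effect points to.
    """
    if not harmonics_hz:
        raise ValueError("at least one harmonic frequency is required")
    return reduce(gcd, harmonics_hz)
```

For the harmonics in the example above, `implied_fundamental_hz([200, 300])` returns 100, even though no 100Hz component was supplied.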

Earbuds cannot fully reproduce low frequencies.

So if earbuds cannot reproduce low frequencies, harmonics can be used to create the perception of them. This technique has become increasingly popular, with many plugins being designed specifically for low-frequency harmonic generation.

If you find psychoacoustic effects interesting, check out our blog post that details some more of them, and how they can be used when mixing and mastering:

It discusses the phantom fundamental effect in greater detail as well as some other useful psychoacoustic techniques.

Additionally, because earbuds are better at producing higher frequencies, the high-frequency range will need to be carefully monitored and controlled to avoid any unpleasantness.

Using earbuds may mean being able to hear the high-frequency range better. This can be problematic if a master contains excessively loud high frequencies or sibilance.

For example, the sibilance or “ess” sound of a vocal can become excessive very quickly. When you couple the unpleasantness of sibilance with earbuds’ ability to reproduce high frequencies, you can see why sibilance can quickly become an issue.

Furthermore, since earbuds don’t allow for reverberation to disperse higher frequencies, sibilance can become increasingly shrill. This gives a mastering engineer even more reason to control sibilance and the high-frequency spectrum.

If you have a mix that needs to be mastered with headphones in mind, send it to us here:

We’ll master it for you and send you a free mastered sample of your mix.

How Headphones are Affecting Mixing and Tracking

Mastering isn’t the only audio production process being affected by the popularity of headphones. In fact, both mixing and tracking are changing in unique and unexpected ways.

The popular use of headphones is also affecting mixing and tracking.

Just like with mastering, the same issues discussed earlier need to be addressed during the mixing process. In other words, the fact that earbuds cannot reproduce low-frequency information results in psychoacoustic effects being used.

Similarly, sibilance needs to be carefully controlled in order to cut down on any shrillness in the mix.

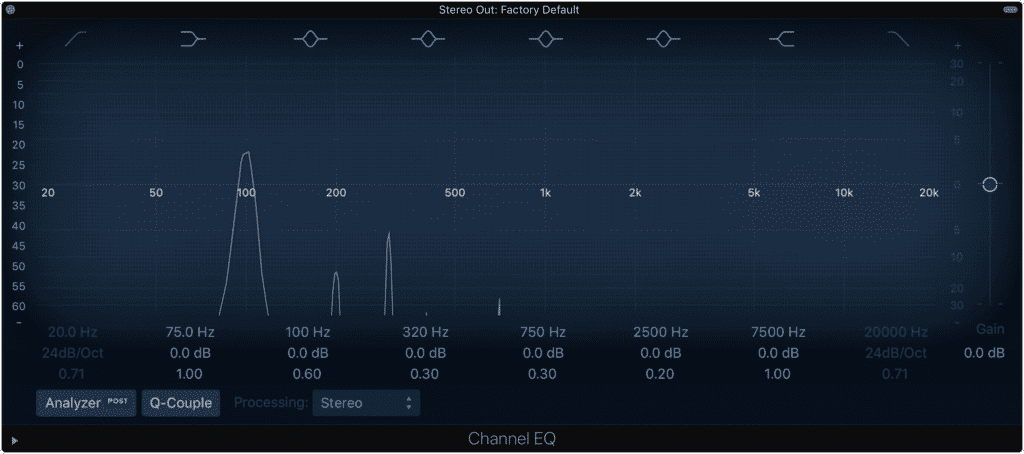

Because reflections and reverberation are not being added during the playback process, additional reverb will need to be recorded or added as an effect during both tracking and mixing.

Additional reverb may be introduced to compensate for the lack of room reflections during record playback.

This means that reverb times of roughly 0.6 to 1.2 seconds will be implemented more often to accommodate complex information and to simulate the effect of an acoustically pleasing room.
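As a rough illustration of how a target reverb time translates into a setting, here is a minimal sketch based on a feedback comb filter, a common building block of algorithmic reverbs. The article doesn't name a reverb algorithm, so the comb filter, the function names, and the 30 millisecond delay used in the note below are all assumptions. Reverb time here means the time it takes the tail to fall by 60dB.

```python
import math


def comb_feedback_gain(delay_seconds, rt60_seconds):
    """Feedback gain that makes a comb filter decay by 60dB in rt60_seconds.

    Each trip around the delay line multiplies the level by the gain, so the
    gain must satisfy gain ** (rt60 / delay) == 10 ** -3.
    """
    if delay_seconds <= 0 or rt60_seconds <= 0:
        raise ValueError("delay and reverb time must be positive")
    return 10 ** (-3.0 * delay_seconds / rt60_seconds)


def decay_db_after(seconds, delay_seconds, gain):
    """Level change in dB after the signal has recirculated for `seconds`."""
    passes = seconds / delay_seconds
    return 20 * math.log10(gain) * passes
```

With a hypothetical 30 millisecond delay, a 1.2 second reverb time needs a higher feedback gain than a 0.6 second one, which matches the intuition that a longer tail means less energy is lost on each repeat.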

With this added reverb-based processing, one would expect even more reverb to be used on vocals and instruments; however, popular music seems to have taken a different route.

Perhaps it's the intimate nature of listening to an artist over headphones, or the close sound headphones provide that has helped to popularize this technique, but whatever it may be, more and more vocals are being processed with less reverberation, closer micing techniques, and with softer, almost whisper-like performances.

Whereas pop music used to include anthemic reverb processing, the pop music of today is dry, upfront, and incredibly detailed.

Pop artists like Billie Eilish have developed an upfront and personal vocal styling, perhaps due in part to headphone use.

For example, if you listen to Billie Eilish’s pop hit ‘Bad Guy’ you’ll notice immediately how close the vocals sound. Not only were they processed with minimal reverberation, but they also exhibit an incredibly close micing technique, in which every detail of the vocal performance can be perceived.

Although this photo is an exaggeration, many vocals are being recorded using a very close-mic technique.

Again, the reasoning behind this change is not completely known, but it is reasonable to conclude that headphones, and how they affect music listening both sonically and emotionally, have in part led to this change in vocal styling.

What Headphone Use Isn’t Changing

As we’ve discussed, headphone use is changing the frequency response and effects processing of both mixing and mastering, as well as performance and tracking techniques. But there is one thing, in particular, that should have changed with headphone use yet simply isn’t.

Headphones allow music to be more easily perceived, as they cancel out background noise.

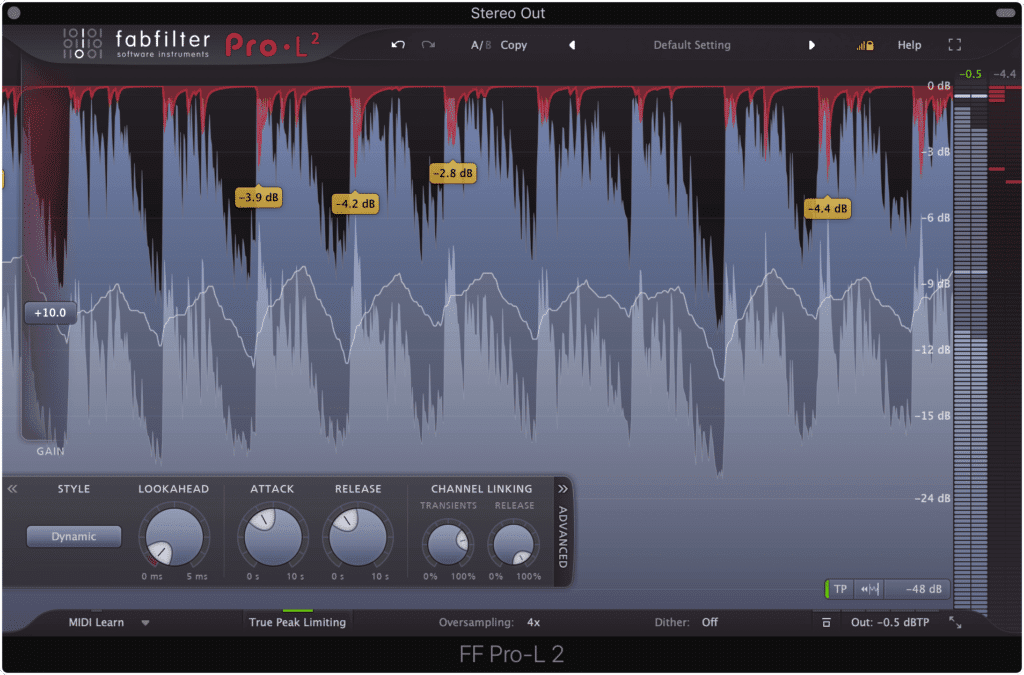

Although headphones allow music to be more easily perceived, both by canceling potentially destructive room acoustics and outside interference, **masters have not become quieter as a result.**

Despite making music easier to hear, headphones have not caused masters to become quieter.

In fact, the only reason masters are beginning to be made quieter is due to the use of loudness normalization prior to streaming.
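At its simplest, loudness normalization is a gain offset: the service measures how loud a track is and turns it up or down toward a target. The sketch below shows that arithmetic only. The function names are invented for this example, loudness is assumed to be expressed in LUFS, and the -14 LUFS target in the note below is an illustrative value, since each service sets its own target and rules.

```python
def normalization_gain_db(measured_lufs, target_lufs):
    """Gain in dB that moves a track from its measured loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db):
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20.0)
```

For a loud master measured at -8 LUFS and an assumed target of -14 LUFS, `normalization_gain_db(-8.0, -14.0)` gives -6.0dB, meaning the track is simply turned down and the extra limiting used to reach -8 LUFS buys no additional loudness.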

If you’d like to learn more about loudness normalization and how it affects the listening experience, you can learn more about it here:

It covers loudness normalization in greater detail.

So why haven’t headphones and their use made music quieter?

The main reason is unknown, but perhaps the fact that listeners have become used to hearing compressed, dynamically limited music is playing a part.

Music has been mastered loudly for nearly two decades, so perhaps it will take some time for things to be turned back down.

Additionally, loud is often perceived as sounding better so this may just be a crutch that has been carried over from a previous era of music production.

Regardless of why masters are still being made loud, hopefully, the ease of hearing music that headphones provide will eventually cause music to be made more dynamic and at a lower overall loudness.

The Future of Headphones

If anything has been made clear by the popularization of headphones, it’s that the changing of the technology used to create and proliferate music changes the music itself. In other words, the tools we use to listen to and create music have the power to change that music.

The technology we use to create and listen to music affects how music is made.

With that said, it only makes sense that we consider how music listening technology will change if we expect to stay up to date on music production.

Customizable earbuds block out more sound than traditional earbuds.

Although it can’t be said for certain what will be invented, one particularly interesting possibility is the advent of affordable customizable earbuds. Currently, earbuds can be molded to the shape of the listener’s ears, just not affordably.

Whenever custom earbuds become affordable, they will no doubt affect how music is produced and mastered.

But as soon as this type of technology becomes available to more music lovers, it will further change how music is listened to and the production behind that music.

So let’s say that the newest Apple Earbud provided with the latest iPhone will be molded to the listener’s ear. This means that almost the entirety of outside noise would be blocked out whenever listening to music.

If Apple were to release a customized earbud, this would popularize in-ears, and in turn, change the sound of music for many people.

In turn, music would be more easily perceived and could be made much quieter - resulting in more dynamic music production.

But let’s take this one step further and say that Apple provides a listening test that when taken determines which frequencies your ears are more sensitive to, and then automatically tailors your listening experience to what would sound best for you.

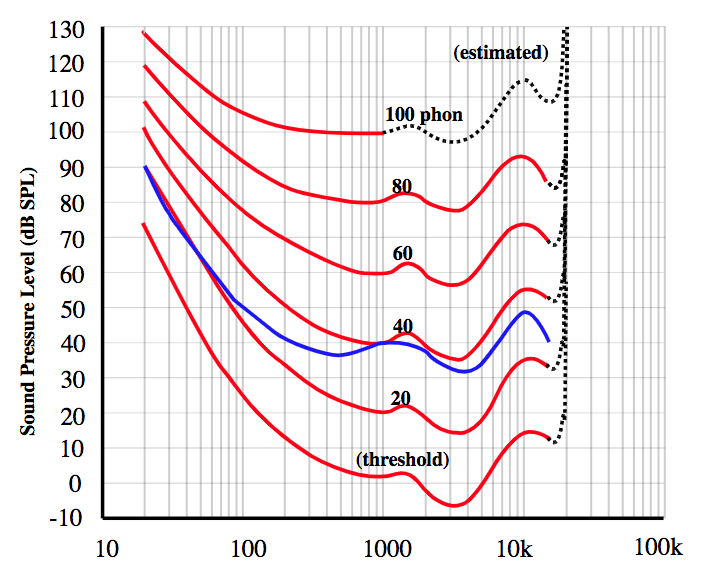

If modeled after the Fletcher-Munson curve, music made for these earbuds would sound different and be processed differently for each unique listener.

Practically speaking, Apple could measure the Fletcher-Munson curve of your hearing using these earbuds, and then implement a frequency curve to create a more balanced frequency response.

This type of post-production processing would no doubt affect how mastering was conducted, as it would almost negate the frequency response established during mastering.
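To picture what this kind of per-listener correction might look like, here is a purely hypothetical sketch. It compares a listener's measured hearing thresholds against a reference in a few frequency bands and boosts or cuts each band by the difference, within a limit. Nothing here reflects an actual Apple feature or a real hearing test; the function name, the band layout, and the `max_change_db` limit are all assumptions, and real equal-loudness behavior also changes with playback level, which this ignores.

```python
def compensation_gains_db(listener_thresholds_db, reference_thresholds_db,
                          max_change_db=12.0):
    """Return a per-band gain in dB that offsets a listener's sensitivity.

    Both arguments map a band's center frequency in Hz to the quietest level
    (in dB) that can be heard in that band. A higher threshold than the
    reference means the listener is less sensitive there, so the band is
    boosted. Gains are clamped to +/- max_change_db.
    """
    gains = {}
    for band_hz, reference_db in reference_thresholds_db.items():
        difference = listener_thresholds_db[band_hz] - reference_db
        gains[band_hz] = max(-max_change_db, min(max_change_db, difference))
    return gains
```

If a listener needed 20dB more level than the reference to hear a 4kHz tone, this sketch would boost that band by the 12dB limit rather than the full 20dB, which is one simple way to avoid extreme corrections.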

Now, this is just one example of how earbuds may change and how these changes can affect audio production, but it shows how these changes need to be kept in mind when creating music.

If you’d like to learn more about the Fletcher-Munson curve and what it means for your unique perception of music, check out our blog post on noise shaping:

In it, you’ll find a comprehensive section on the Fletcher-Munson curve, as well as helpful information on noise shaping and dithering.

Conclusion

Headphones and earbuds have cemented themselves as the new way to listen to music. Not only are they changing the emotional context of music, but they’re also affecting how music is created, performed, and engineered.

From tracking to mixing and mastering, the popularization of earbuds over loudspeakers is quickly changing the production process and causing engineers to consider how their masters will sound on the popular listening equipment of the time.

Whether you are an engineer or an artist, understanding how headphones are changing music is crucial to creating music that sounds great to modern audiences.

If you want your music mastered by engineers that understand how to make music sound great on today’s most popular listening devices, send us one of your mixes here:

We’ll master it for you and send you a free mastered sample.

Have earbuds changed how you listen to or create music?